As I was typing this sentence (on Sunday), something caught my eye from the window. It was a small rabbit, or should I say large bunny, bounding across the lawn. I’m writing this time from the second floor bedroom, on a desk in front of a long rectangular window that allows me to look out over our humble kingdom. From this perch I can gaze out over the yard and – wow, there goes another bunny! That one was not bounding, that was a hurried scamper. A comical scamper. Boy those things can move quick, can’t they. I don’t think that was the same one, I would have noticed it come back across the yard. Same size though. Could be siblings. Could be twins. I guess they’re all kind of like twins, aren’t they, because they all come out at the same time. Twins, triplets, quadruplets. There’s a word for this – littermates. Yes, littermates.

This is extremely stream of consciousness. You’re right along for the ride with me here. I can see all of these things from this window, and more, because I can see the feeder from here. And the lake. I should say, the feeder complex. I have been here for the various stages of this avian feeding station’s development, and would say that we can now officially call this a complex, the most recent addition being an oval-shaped mulch patch with African Lilies, for the hummingbirds. They like those African Lilies. Here’s a photo, courtesy of the internet, of what they look like.

In the last paragraph, I wrote, “oval-shaped”. When I wrote that sentence, I first wrote ovular, you know, like circular, or rectangular, but it immediately struck me as sus, and my intuition was correct. That word is already taken. For things related to ovules, of course. The English language is weird. The other day we were watching soccer and I said something like, “She’d just shotten the ball” and the parents stopped me and said, “Shotten??” Got, gotten, fine. Shot, shotten, no sir. Gotten is still alive in the common vernacular but doesn’t have to be used (I just got home, I’ve just gotten home), but it might go the same way as shotten, and die out someday. Because, I just did some Googling, it’s not that you can’t say shotten. It’s not incorrect, it’s just a dead word, listed by the dictionaries as obsolete. Once upon a time it was used, if we can trust this nice graph from Collins Dictionary, some time in the 1700s, and who knows how much before then.

Anyways, back to the African Lilies. Ours are yellow and orange. They’re dainty things. So now we’ve got some of those below our feeders, of which we have four hanging from two metal poles that are four feet high or so, and one hanging from a cottonwood next to the mulch oval. From one pole, there are three smaller feeders: one with the sugar water for the hummingbirds, with little fake flowers for them to stick their tiny beaks into, a standard one, we’ll just call it that because I can’t really tell what’s going on with it from this angle, but it looks similar to the feeder hanging from the cottonwood, which has a little ledge in front of it that the birds and the undesirables (the squirrels and the chipmunks) perch on and pull seeds out through a slit in the bottom, and then there is a sack of smaller seeds, with a thin sieve-like mesh skin, that is favored more by birds with skinnier beaks. I’m thinking that the nuthatch might go for this one, and speak of the angel, the nuthatch has just landed. The hummingbird has just shown up as well. It’s a whirlwind out here. At this moment, I can see these birds: a female cardinal, four, then six sparrows, a hummingbird, a nuthatch, a few geese, far off, and some other kind of sparrow, or maybe a chickadee. These guys n’ gals are out here partying every day. Attached to the sack is a small bowl with jelly for the orioles. They were around earlier in the summer, with the red-winged blackbirds. They’ve both gone away now. Hanging from the other pole is a massive multi-storied megafeeder. This is monopolized by the sparrows. There is currently a sparrow at every feeding port, and they’re fighting to keep it that way. The nuthatch keeps trying to get in there. He flies back and forth, looking for an angle, a way in. He finds it, or forces it, gets a few seeds, and is chased off. He’s my favorite of these birds, I have to say.
Something about the way he hops and skips, the way he swivels his head, and pulls seeds out of the feeder with his long, sharp beak. He trawls the sides of the cottonwoods, poking and prodding, snapping juicy morsels up out of the cracks, and possibly hiding seeds. I read that birds do that, wedge seeds into the cracks of trees. He’s got a very pretty blue, grey, white, black coloration. A lot of personality in that bird. He could be a she, I actually don’t know. Another hummingbird has just shown up as well. It’s now confirmed that there are two hummingbirds around.

A lot of action going on down there, man. You could watch it all day, especially if you were a cat. From here would be great, but even better would be our downstairs window, a large, three-paned window with a fullscreen view of the feeders. That view is every cat’s dream. Cat heaven. And Daisy heaven is looking at fish. It doesn’t take much, with them. I was sitting out on the deck in the rain yesterday, right under the ledge of the house. I was only being sprinkled on. It was a soft rain, the temperature was cool, but a very comfortable, perfect cool, not chilly, and with low wind. It was just quiet, but not unsettlingly quiet, not dead silent, just quiet, with only the gentle white noise pitter-patter of the drops, on wood, water, and leaves. And with the fresh scent in the air, the fresh scent of earth, of wet wood, of rainwater. Daisy was out with me, lying beside me near the steps, staring off into the distance, out between the large trunks of the cottonwoods, at the geese in the yard. I sat there, watching her, watching the ripples of the water on the surface of the lake, watching the sky, watching the geese, and in that moment, so full of calm, my senses so pleasantly stimulated, a little thought popped into my head, that this was heaven. It was a fleeting thought, really. But it was a solid one. I wasn’t out there for too long before I felt restless, and I didn’t stay. For that short time, though, I guess I had a little taste of it. A brush with the divine. And you know, it really doesn’t take much. It doesn’t take much, to be happy. And it doesn’t have to cost a dime.

Now it’s Monday. Enough talk about the birds and the wind and crap like that. Let’s get down to business.

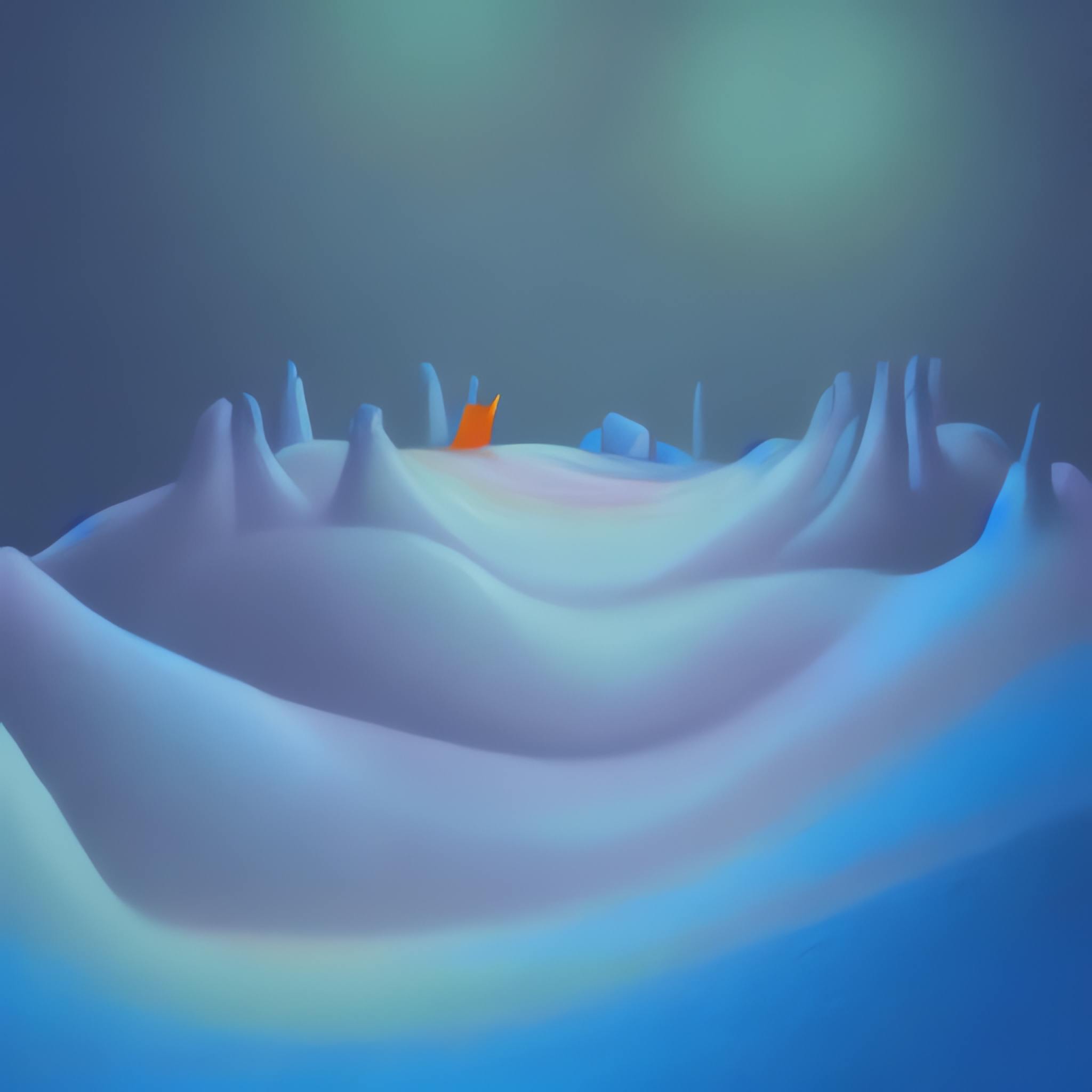

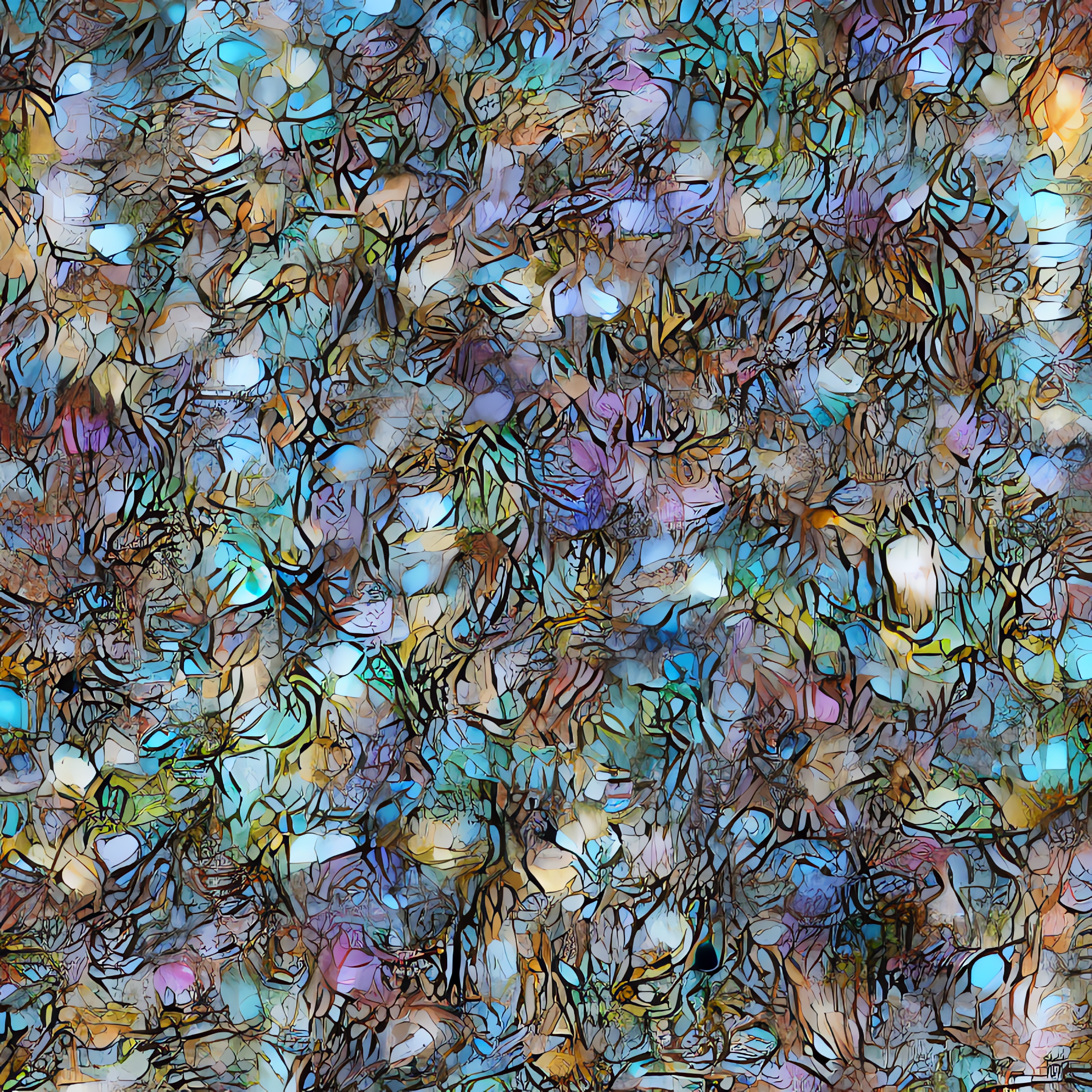

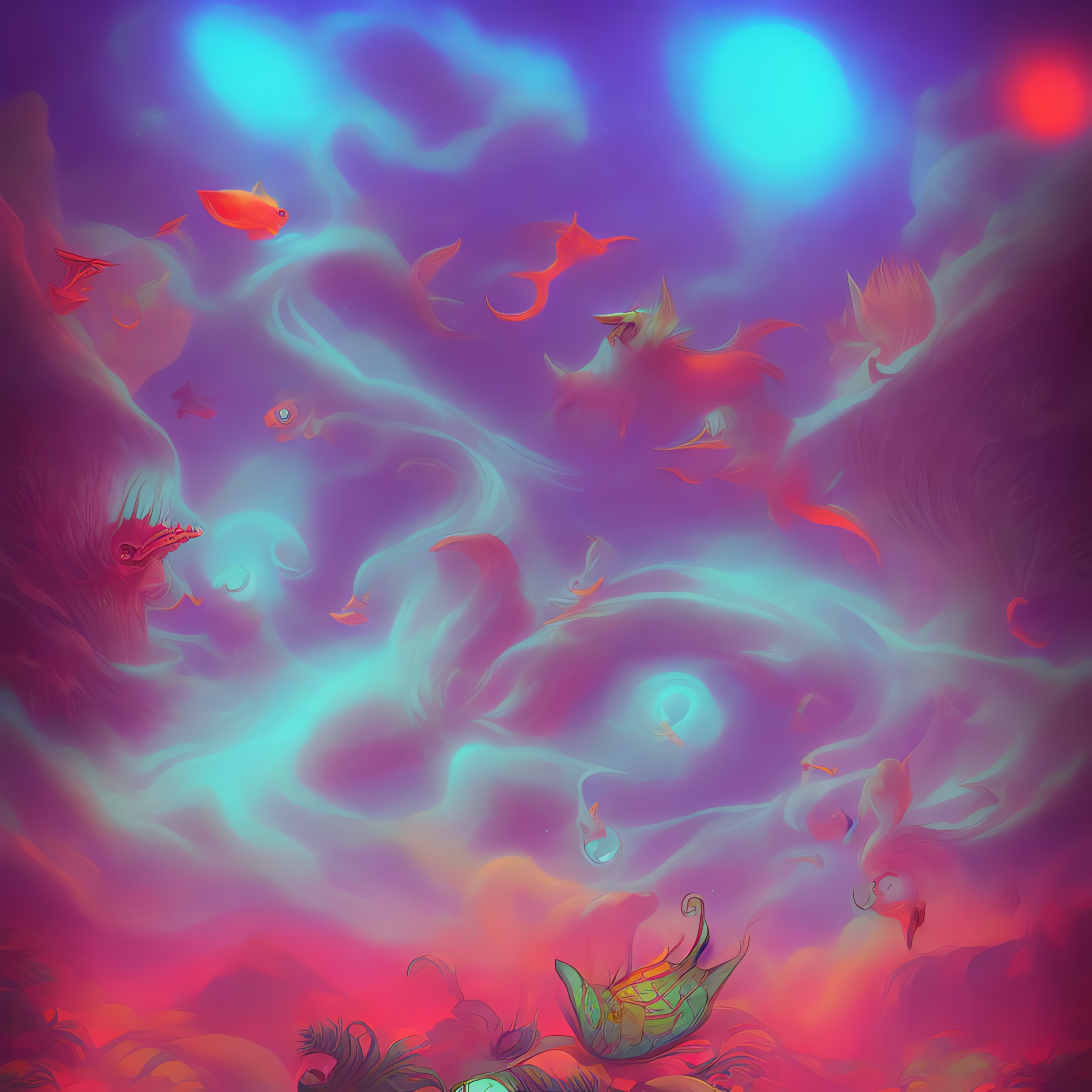

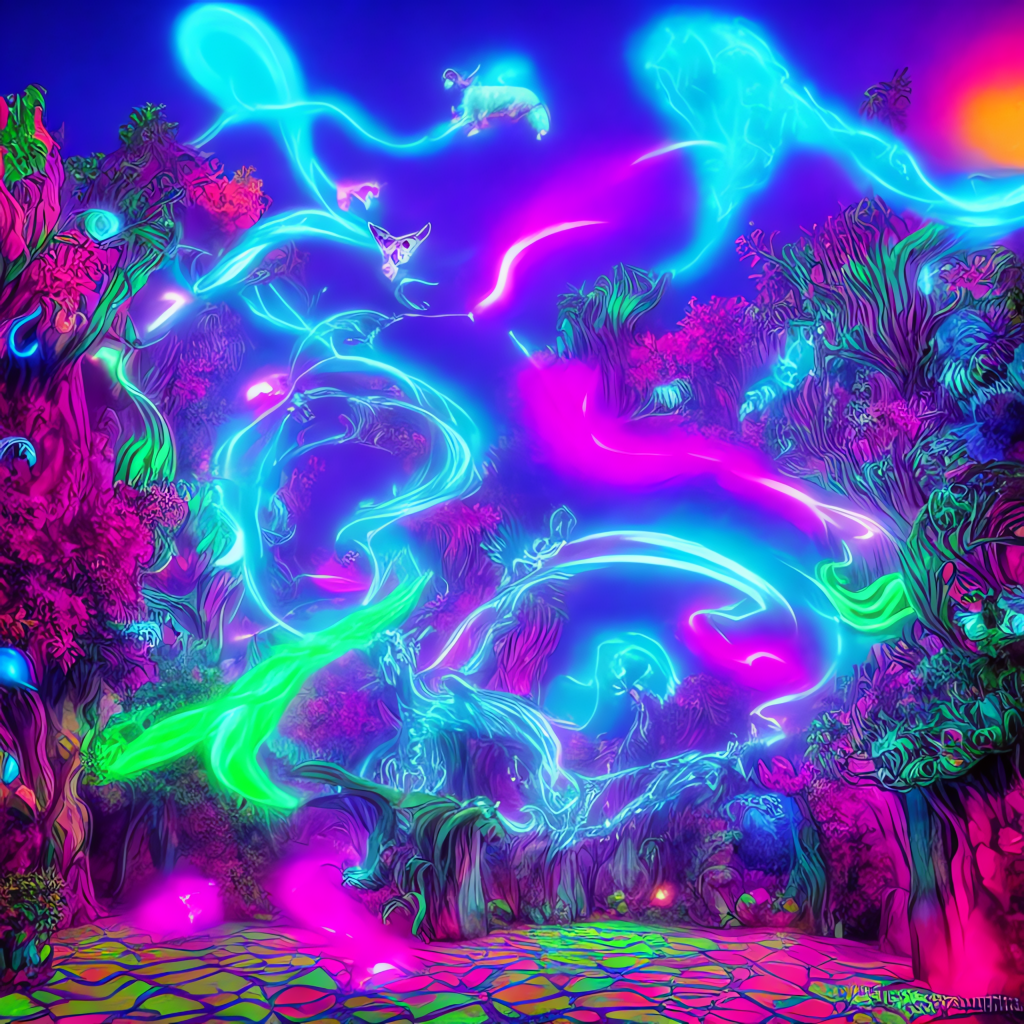

The text prompt for this image was “Creatures from a phantasmagorical universe, Pastel Art, Beautiful Lighting, Warm Color Palette.” And this image was built in 22 steps. Last post looked at the effect of step count on image generation, and now we’ll talk about the effect of prompt text and seed number. First, the seed number. Like an actual plant, the seed is the basis for the image. How exactly it works I don’t know, but I can tell you that if you use the same seed for two images, even if they come out wildly different in the end because of all of the other parameters, they must have started the same way. So, if you generate an image twice, keeping all parameters the same, including the seed, you will have nearly the same image in the end. If you keep all parameters the same and change only the seed, you will have an entirely different image in the end. The seed for that first image, our experiment image, was 54445. Below are images generated with seeds 54446 and 54447, and otherwise the exact same parameters.

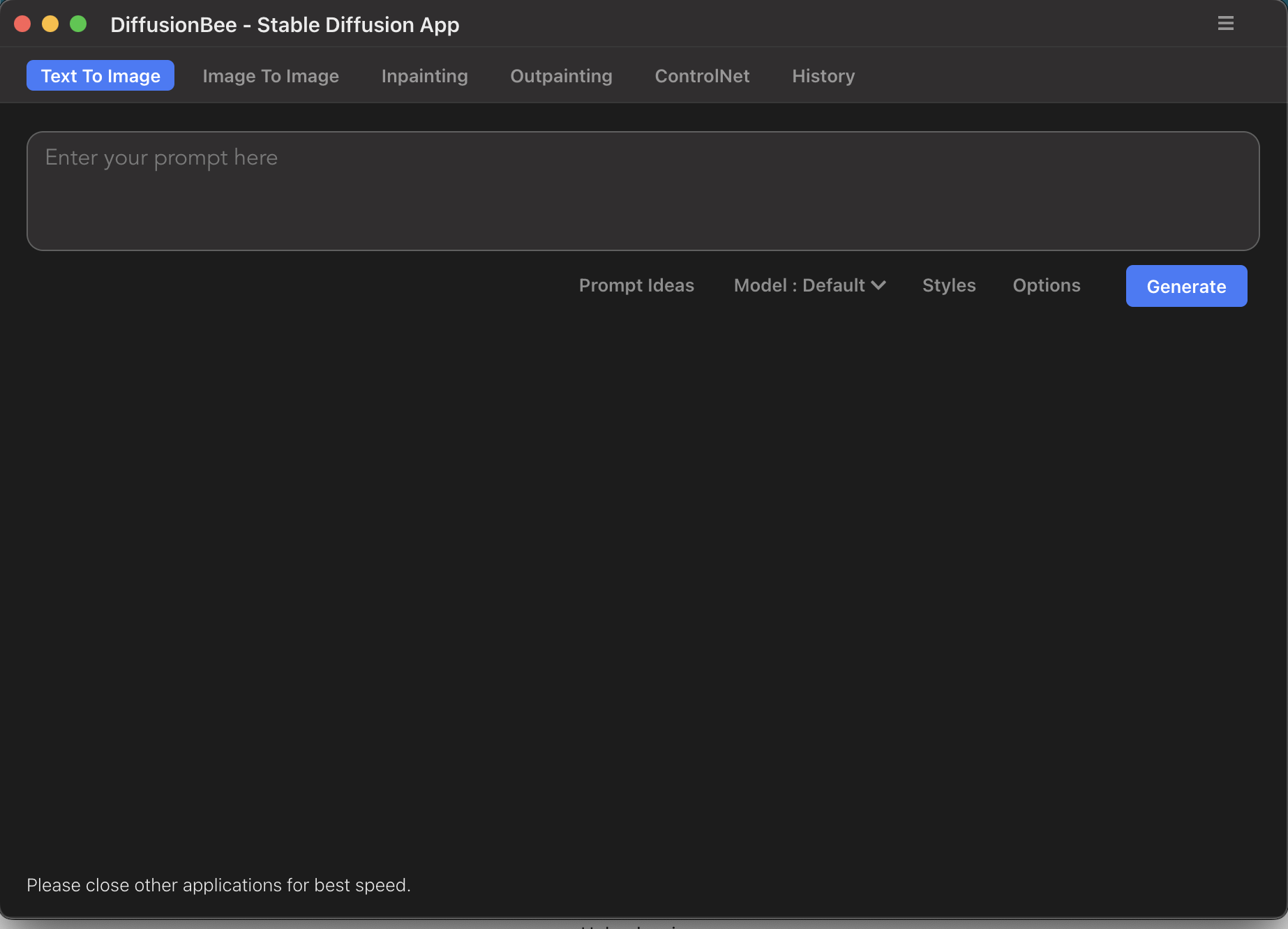

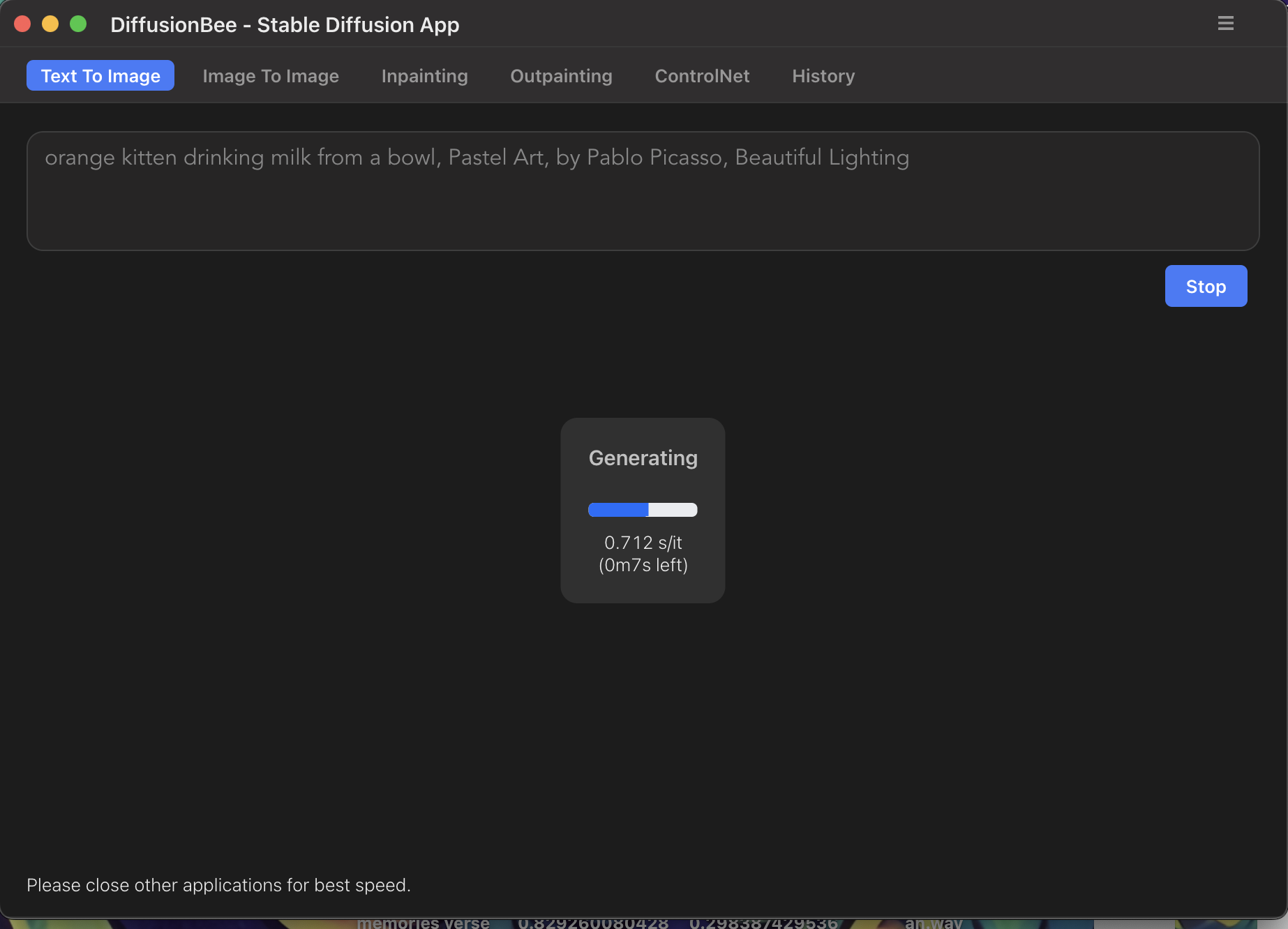

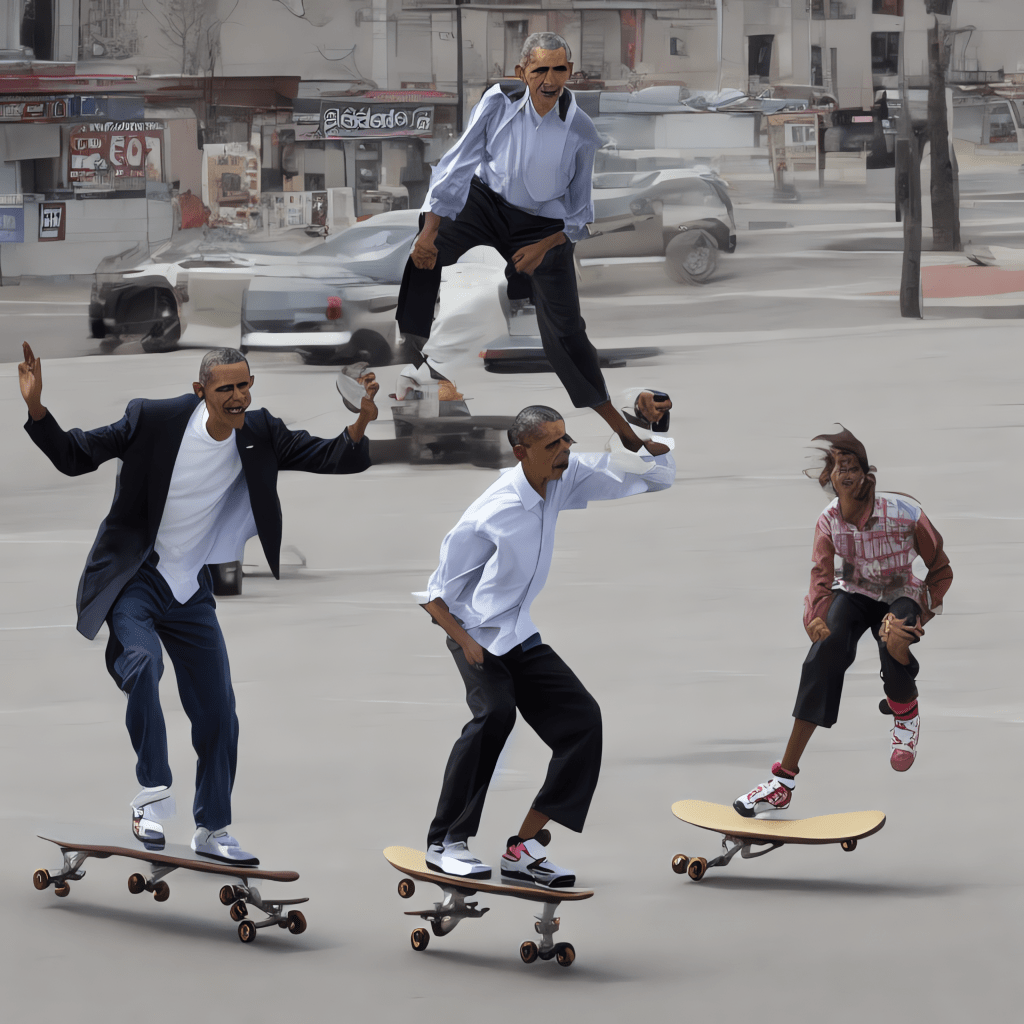

This means that you could download DiffusionBee, set all of the parameters to exactly what I had them as for these images, and you would get nearly the same thing. You don’t get exactly the same thing, because the algorithm that generates these is, as they say in the biz, nondeterministic. (Also... how freakin cool are these pictures? I think I could have a promising career as a Phantasmagorian AI Art Programmer. Wouldn’t that be fun to tell people.) It would be interesting to know what exactly a seed is in the code, how that works. I’m trying to think of what it could be, like a set of numbers or parameters that are related to the growth of the image. I generated three more images with totally different text prompts off of the same seed, to see if that would reveal anything about the seed. 1. “Gorilla in a top hat, by Vincent van Gogh”, 2. “a bowl of cereal, colored pencil, children’s drawing”, and 3. “Barack Obama riding a skateboard, 8-bit”.

I can only really see one similarity between them. All of these images have multiples of the subject. I’ve wondered about that, because sometimes there are multiples, and sometimes not, and it doesn’t matter if you specify how many gorillas you want in the prompt text. That may be outside of the prompt’s control, and dependent only on the seed.

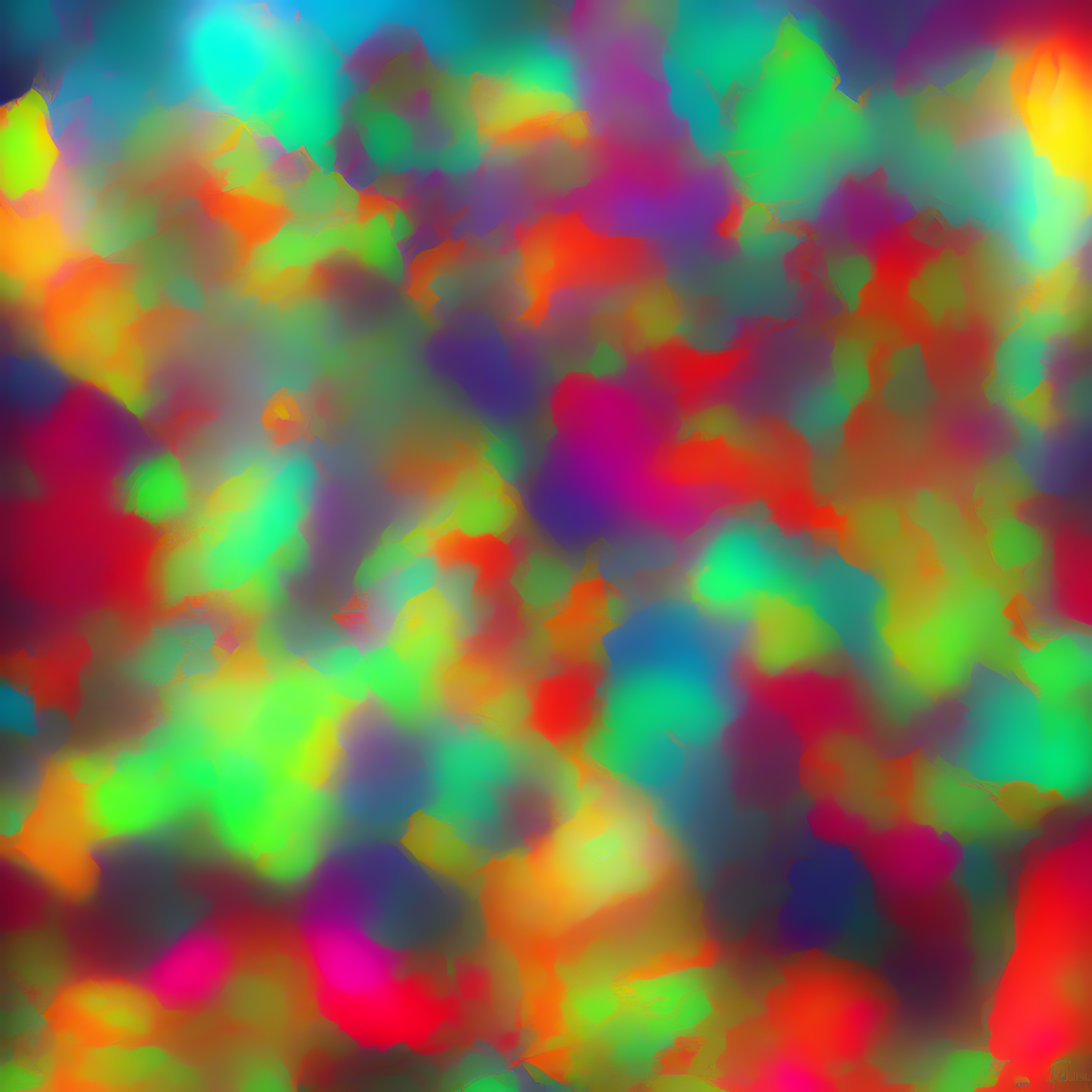

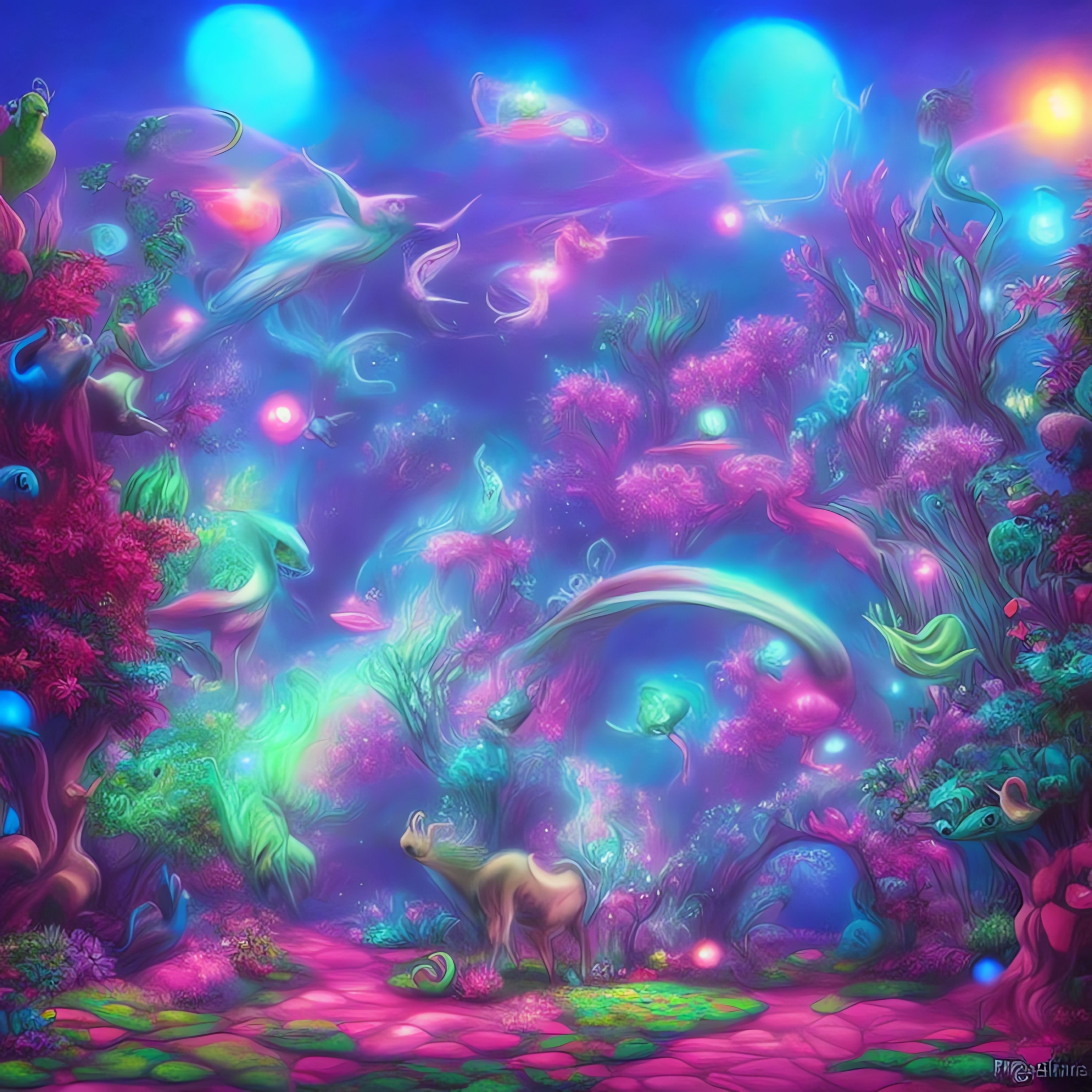

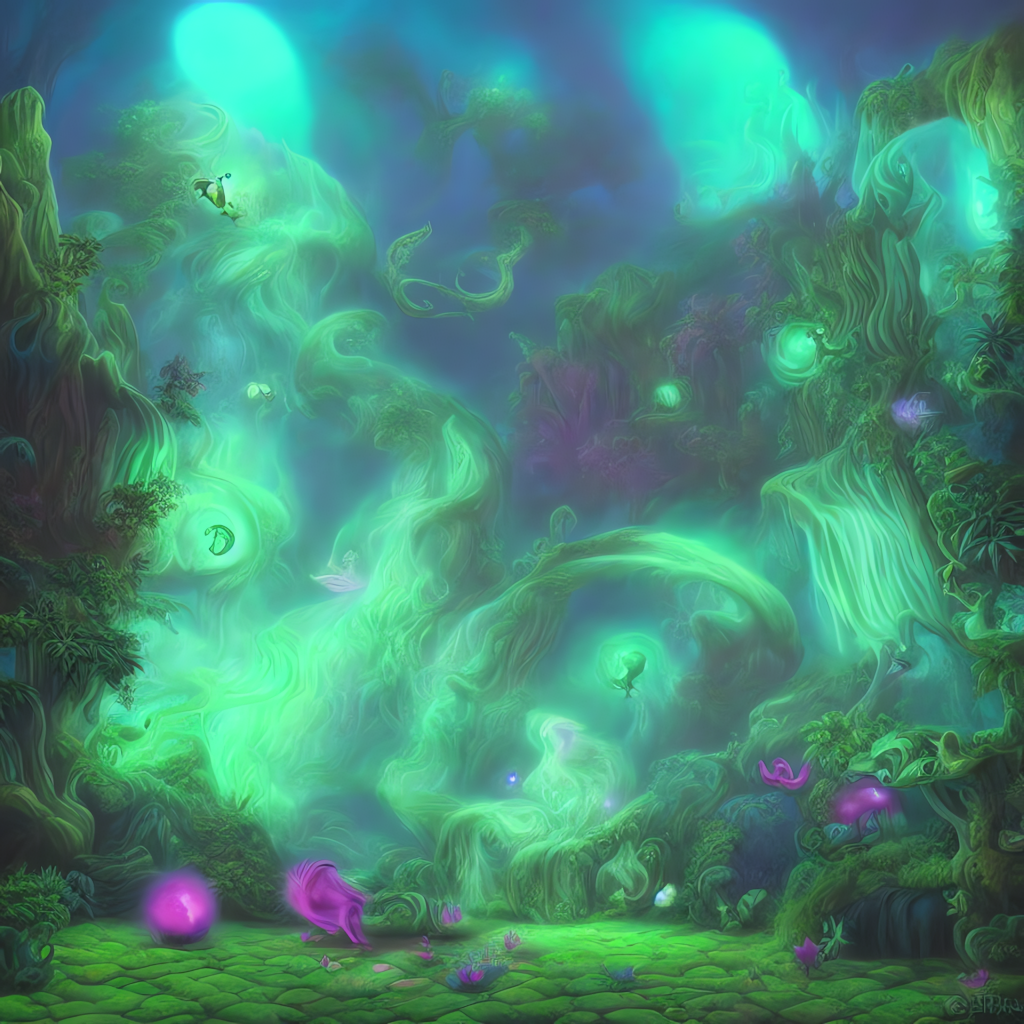
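On the question of what a seed is in the code: I haven’t read DiffusionBee’s source, so take this as a sketch and not the real thing. In image generators of this kind, the seed is typically just a number handed to a pseudorandom number generator, which then produces the random noise the image starts from. Here is a tiny Python stand-in using only the standard library. The function name `starting_noise` and the eight-number “canvas” are made up for illustration.

```python
import random


def starting_noise(seed, size=8):
    """Return a short list of pseudorandom numbers standing in for the
    noisy canvas an image starts from (hypothetical helper, not DiffusionBee code)."""
    rng = random.Random(seed)  # the seed fixes the generator's entire sequence
    return [rng.gauss(0.0, 1.0) for _ in range(size)]


first_run = starting_noise(54445)
second_run = starting_noise(54445)
neighbor = starting_noise(54446)

# Same seed: the two runs start from identical noise.
print(first_run == second_run)
# Seed off by one: the starting noise is unrelated.
print(first_run == neighbor)
```

Notice that 54445 and 54446 are neighbors as numbers, but the noise they produce has nothing in common, which fits with the next-door seeds above giving completely different pictures.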

Now looking at the effect of prompt text. In the next image, I changed only one thing. In the prompt text, I changed “warm color palette” to “cool color palette”, and now you have an image that is in one way quite different, and yet similar. Take a gander.

Many differences, and many similarities. You can see that the bones of the image are the same. That’s really where the seed is coming into play. The bones are the same, but the flavor, the details have changed. There is much more of a pronounced glow to the image, which I really love. The whole thing is glowing in mystical blue light. All of the flying fish are gone, and the firecat, the little glowing mushroom lamps, and the red sun in the upper right corner, gone as well. In the cool color palette, you have more detail in the background, less of a foreground (on the sides of the image), and now a really interesting scene at the bottom, with an incredible pink-purple boar creature, and a large, curly, pink monkey. There are new plants, and some yellow thing that my brain is interpreting as a butterfly. Would you expect such a different image just from asking the program to change the color scheme? I didn’t. I thought it would take the same image and just color it differently, but it’s much more than that. I had a lot of fun trying other color schemes and styles and seeing what popped out. Like the chocolates in a box of chocolates, you just don’t know what you’re going to get.
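Here is one way to picture why the bones stay put while the flavor changes. This is a toy, not the actual algorithm: I’m assuming the picture starts as seeded noise and gets nudged a little toward “what the prompt wants” at every step. Everything in it (`prompt_target`, `toy_generate`, the `pull` amount, six numbers instead of pixels) is invented for illustration.

```python
import random


def seeded_noise(seed, size=6):
    """Starting canvas: pseudorandom numbers fixed entirely by the seed."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(size)]


def prompt_target(prompt, size=6):
    """Hypothetical stand-in for what the prompt asks for.
    A real model uses a learned text encoder; here the prompt
    string simply seeds another generator."""
    rng = random.Random(prompt)
    return [rng.gauss(0.0, 1.0) for _ in range(size)]


def toy_generate(prompt, seed, steps=22, pull=0.1):
    """Start from seeded noise and move a fraction `pull` of the way
    toward the prompt's target on every step."""
    canvas = seeded_noise(seed)
    target = prompt_target(prompt)
    for _ in range(steps):
        canvas = [c + pull * (t - c) for c, t in zip(canvas, target)]
    return canvas


warm_start = toy_generate("warm color palette", seed=54445, steps=0)
cool_start = toy_generate("cool color palette", seed=54445, steps=0)
warm_final = toy_generate("warm color palette", seed=54445)
cool_final = toy_generate("cool color palette", seed=54445)

print(warm_start == cool_start)  # same seed: identical starting point
print(warm_final == cool_final)  # different prompts: different results
```

With zero steps the two prompts are indistinguishable, because nothing but the seed has had a say yet. After 22 steps each result has been pulled toward its own prompt, but a bit of the shared starting noise is still in there. That leftover is my best guess at where the shared bones come from.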

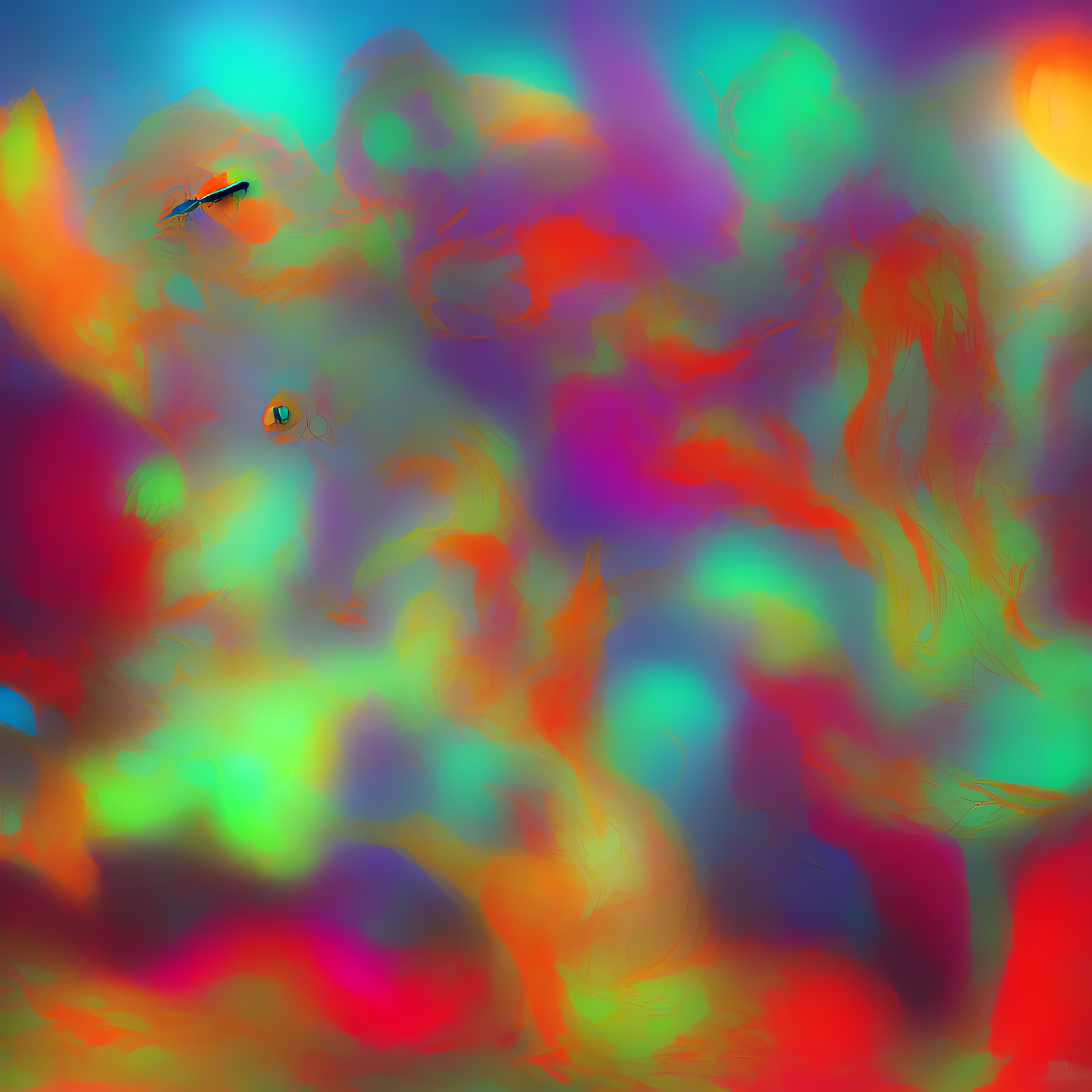

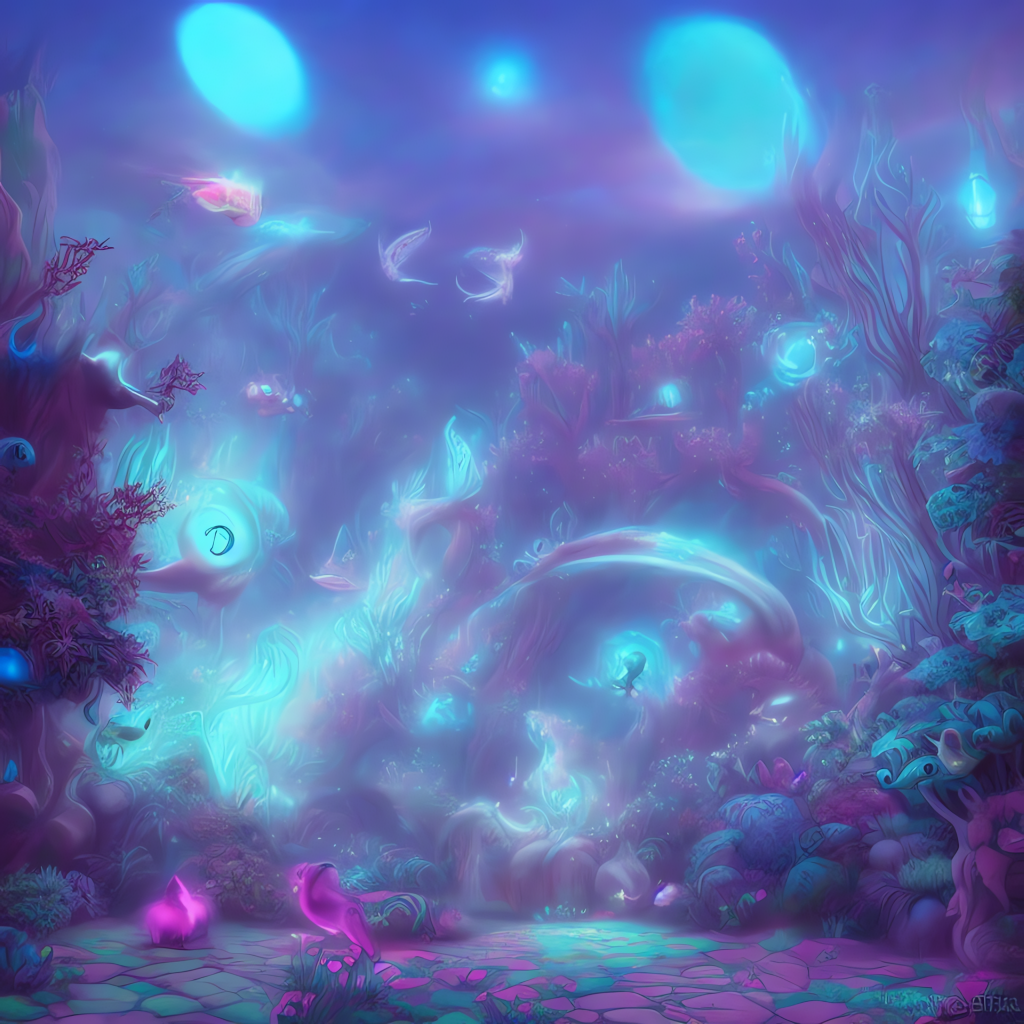

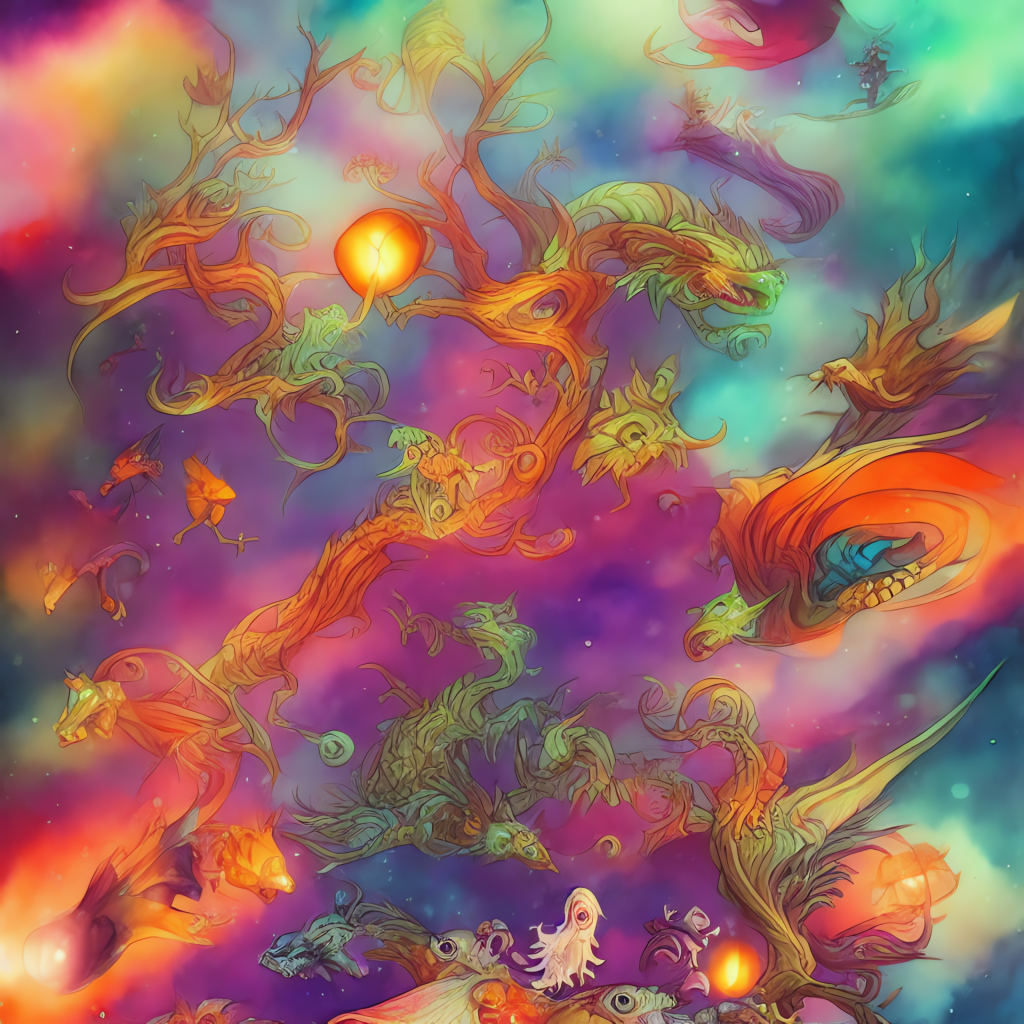

They all have the same foundation, but the aesthetic is totally different. So how about changing something else, say, “Watercolor” instead of “Pastel Art”?

Amazing. So amazing. Look how the branches of the tree on the bottom right become the hair of the green rhino pokemon creature. The leg of the firecat becomes the leg of the dragon whatever. (I’m trying my best to describe these phantasm creatures to you. It’s hard, ok. I could make up names for them. The Wakkanok, the Schmerkelvitz.) The background just disappears and becomes stars, and the foreground is made of creatures, and colored gas. Now we really are out in the universe. I love it.

This one was “creatures in a phantasmagorian universe, Pastel Art, Cool Color Palette” but without “Beautiful Lighting”. That made a huge difference. I’ll take my beautiful lighting, please.

What if we change “universe” to “desert”?

Incredible.

Some of the best, here. On 10 steps, we could see more creatures. I love the blurry dreaminess of the watercolor.

Very cool. I’m really in love with these. You just never know what you’re going to get. So much to play with here, with DiffusionBee. This is a very simple program, no coding required, no importing models or anything. Also, they have AI video now, I’ve seen it. A full movie trailer, 30 seconds live action, apparently made with AI. Think of the implications. We could, potentially, the average person, easily generate hundreds of videos of penguins riding horses. Into battle, at the Kentucky Derby, joyously through a meadow, along the beach. This is coming, this is the future. It’s exciting stuff.